Delivering High Performance AI in a Low Power Footprint to Edge Applications

20.12.2023 - Running AI models on FPGAs with RISC-V

Smart sensors are increasingly being deployed in edge applications, where they generate a deluge of data. This data has local context, is highly time sensitive and in many cases requires low-latency analysis. Here, the time, energy and cost of a round trip to a data center make that route impractical. Increasingly, artificial intelligence (AI) is being used to extract insight from the data stream.

Edge applications are characterized by low power consumption, small physical size and cost sensitivity. Traditional CPU, GPU and FPGA solutions lack either the power profile or the deterministic behavior needed for real-time response. Tools such as TensorFlow Lite can reduce the complexity and compute requirements of AI models for microcontroller implementations, but in many cases the resulting implementations still lack the performance for real-time response.

Efinix FPGAs feature a fabric architecture that delivers high compute capability in a small, low-power device. This makes them a natural fit for conventional edge applications. The Titanium family of devices features the Sapphire RISC-V processor, which delivers quad-core compute capability. The RISC-V instruction set architecture is open and reserves part of its encoding space for instructions that the developer can define. In an FPGA design this capability is ideal, as custom instructions can be defined and implemented in hardware alongside the processor.
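To make the idea of developer-defined instructions concrete, the sketch below packs the fields of a standard 32-bit RISC-V R-type instruction into a machine word, using the `custom-0` major opcode that the base specification sets aside for extensions. It is only an illustration: the source does not say which opcode or function codes the Sapphire core uses, so the register numbers and the `funct3`/`funct7` values here are assumptions chosen for the example.

```python
# Major opcodes the RISC-V base ISA reserves for custom extensions.
CUSTOM_OPCODES = {"custom-0": 0x0B, "custom-1": 0x2B}


def encode_r_type(opcode, rd, funct3, rs1, rs2, funct7):
    """Pack the six R-type fields into one 32-bit instruction word."""
    # Fields listed from the least significant bit upward, with their widths.
    fields = [
        ("opcode", opcode, 7),
        ("rd", rd, 5),
        ("funct3", funct3, 3),
        ("rs1", rs1, 5),
        ("rs2", rs2, 5),
        ("funct7", funct7, 7),
    ]
    word, shift = 0, 0
    for name, value, width in fields:
        if not 0 <= value < (1 << width):
            raise ValueError(f"{name}={value} does not fit in {width} bits")
        word |= value << shift
        shift += width
    return word


if __name__ == "__main__":
    # Hypothetical custom operation: result in x10, operands in x11 and x12.
    word = encode_r_type(CUSTOM_OPCODES["custom-0"], rd=10, funct3=0,
                         rs1=11, rs2=12, funct7=0)
    print(f"{word:#010x}")
```

The only difference from an ordinary `add x10, x11, x12` is the opcode field, which is why a custom instruction can read and write the normal register file while its behavior is supplied by logic in the FPGA fabric.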

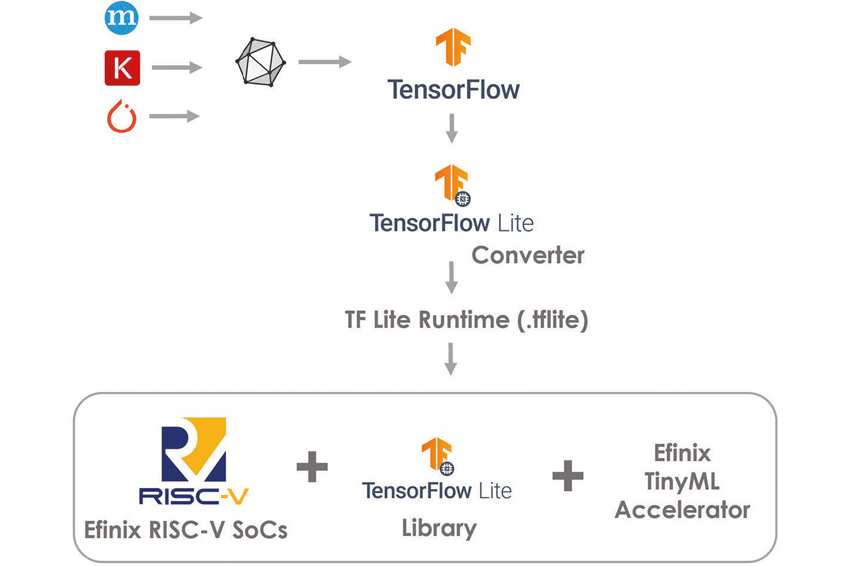

Run TensorFlow Lite Models at Almost Hardware Speed

AI models that have been quantized for edge applications using TensorFlow Lite comprise a predefined set of primitives that are typically implemented on a microcontroller architecture using an open-source library. These quantized models can be run on the Efinix Sapphire core without further optimization. By implementing the primitives as custom instructions, however, the RISC-V core can be customized to run TensorFlow Lite models at almost hardware speed. Operations instantiated in custom instructions can be made to run up to 500 times faster than the equivalent operations executed only in software. The result is a dramatic acceleration of the overall AI model.
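How much the overall model speeds up depends on how much of its runtime is spent in the accelerated operations. A rough estimate can be made with Amdahl's law, as in the sketch below. The 500x figure is the upper bound quoted above; the runtime fractions are hypothetical values picked for illustration, not measurements from any Efinix design.

```python
def overall_speedup(accelerated_fraction, kernel_speedup):
    """Amdahl's law: whole-model speedup when only part of the runtime is accelerated.

    accelerated_fraction: share of the original runtime spent in operations
                          that move to custom instructions (0.0 to 1.0).
    kernel_speedup:       how many times faster those operations become.
    """
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if kernel_speedup <= 0:
        raise ValueError("kernel_speedup must be positive")
    # New runtime = untouched part + accelerated part, relative to the original.
    new_runtime = (1.0 - accelerated_fraction) + accelerated_fraction / kernel_speedup
    return 1.0 / new_runtime


if __name__ == "__main__":
    for fraction in (0.50, 0.90, 0.99):  # hypothetical runtime shares
        speedup = overall_speedup(fraction, 500.0)
        print(f"{fraction:.0%} of runtime accelerated 500x -> {speedup:.1f}x overall")
```

The estimate shows why it matters to accelerate the primitives that dominate a model's runtime: the part left in software quickly becomes the limit on overall performance.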

Efinix has developed a TinyML Platform configuration tool that analyzes a TensorFlow Lite model and recommends a series of predefined custom instructions. The custom instructions are instantiated in the designer’s project and can be called in place of the routines delivered with the TensorFlow runtime library. In this way, the designer can analyze and accelerate a TinyML model running on an Efinix FPGA with just a few mouse clicks.
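The idea of calling a custom instruction instead of a library routine can be pictured as swapping one entry in a table of kernels. The sketch below is a conceptual model only: the names `KERNELS`, `enable_custom_instruction` and `run` are hypothetical and do not come from the Efinix tools or the TensorFlow Lite library, and the "custom" kernel is a plain Python stand-in so that the example runs on any machine.

```python
def dot_int8_reference(a, b, a_zero_point=0, b_zero_point=0):
    """Portable software routine: quantized dot product with a wide accumulator."""
    acc = 0
    for x, y in zip(a, b):
        acc += (x - a_zero_point) * (y - b_zero_point)
    return acc


def dot_int8_custom(a, b, a_zero_point=0, b_zero_point=0):
    """Stand-in for a hardware custom instruction.

    On a real device this would issue the instruction; here it computes the
    same result in Python, because the replacement must be functionally
    identical to the routine it displaces.
    """
    return sum((x - a_zero_point) * (y - b_zero_point) for x, y in zip(a, b))


# Hypothetical kernel table: every primitive starts with its software routine.
KERNELS = {"dot_int8": dot_int8_reference}


def enable_custom_instruction(name, implementation):
    """Route a primitive to an accelerated implementation."""
    if name not in KERNELS:
        raise KeyError(f"unknown primitive: {name}")
    KERNELS[name] = implementation


def run(name, *args, **kwargs):
    """Call whichever implementation is currently registered for a primitive."""
    return KERNELS[name](*args, **kwargs)


if __name__ == "__main__":
    a, b = [1, -2, 3], [4, 5, -6]
    before = run("dot_int8", a, b)
    enable_custom_instruction("dot_int8", dot_int8_custom)
    after = run("dot_int8", a, b)
    print(before, after)  # both calls return the same value
```

The model code that calls `run` does not change when a primitive is accelerated, which is the property that lets acceleration be applied to an existing quantized model.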

Custom Instructions Available on the Efinix GitHub

In the spirit of the open-source community, Efinix has made many of the custom instructions available on the Efinix GitHub. Example designs, AI models and tutorials walk the designer through the process of quantizing and accelerating popular AI models.

The combination of an efficient FPGA fabric, the open RISC-V architecture and the open-source TensorFlow Lite community has brought the true potential of AI applications to the edge. For the first time, developers can innovate in software on the FPGA platform, optimize their AI models to achieve the desired performance and then cost-effectively deploy them at the edge with zero non-recurring engineering (NRE) cost or lead time.

Author

Mark Oliver, Vice President of Marketing and Business Development at Efinix